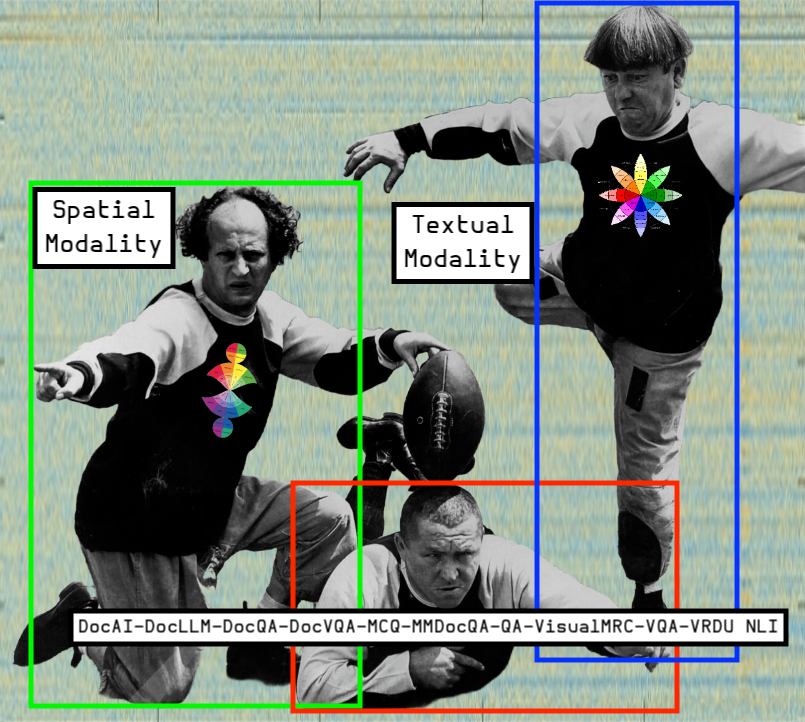

Simply augmenting the text with bounding box information via additive positional encoding may not capture the intricate relationships between text semantics and spatial layout, especially for visually rich documents.

DocLLM: A layout-aware generative language model for multimodal document understanding
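The additive scheme the quote argues against can be sketched in a few lines. Each token's embedding is summed elementwise with an embedding computed from its bounding box, the same way a 1-D positional encoding is added. This is a minimal sketch under stated assumptions, not DocLLM's own method: `bbox_to_embedding`, `add_layout`, and the fixed matrix `W` are hypothetical, and box coordinates are assumed to be normalized to [0, 1].

```python
from typing import List, Sequence

Vector = List[float]


def bbox_to_embedding(bbox: Sequence[float], weights: Sequence[Sequence[float]]) -> Vector:
    """Hypothetical linear projection of a normalized box (x0, y0, x1, y1)
    into the model dimension: one row of `weights` per output dimension."""
    return [sum(w * c for w, c in zip(row, bbox)) for row in weights]


def add_layout(token_emb: Vector, bbox: Sequence[float],
               weights: Sequence[Sequence[float]]) -> Vector:
    """Additive layout encoding: elementwise sum of token and box embeddings."""
    layout = bbox_to_embedding(bbox, weights)
    if len(layout) != len(token_emb):
        raise ValueError("layout projection must match the token embedding size")
    return [t + l for t, l in zip(token_emb, layout)]


# Hypothetical 2-d model: the projection keeps only x0 and y0.
W = [[1, 0, 0, 0],
     [0, 1, 0, 0]]

# Two different tokens at two different positions...
a = add_layout([0.5, 0.0], (0.25, 0.5, 0.75, 0.75), W)
b = add_layout([0.25, 0.25], (0.5, 0.25, 0.75, 0.75), W)
print(a == b)  # True: both become [0.75, 0.5]
```

Because the sum does not record which part came from the text and which from the layout, the two different (token, box) pairs collapse to the same vector. A learned, high-dimensional projection makes exact collisions unlikely, but the two signals are still mixed into a single vector, which is the kind of entanglement the quote warns about.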

You must be aware of one problem with semantic caches: sentences with opposite meanings might have high semantic textual similarity.

Anonymous
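The semantic-cache problem can be reproduced with a toy setup. This is a minimal sketch that assumes a bag-of-words `embed` function standing in for a real sentence-embedding model, plus a hypothetical `SemanticCache` class that serves a stored answer whenever cosine similarity reaches a threshold (0.85 here, an arbitrary choice). Adding "not" changes a single token, so the negated question still scores about 0.894 and receives the cached answer to the original question.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def embed(text: str) -> Counter:
    """Toy embedding (an assumption): lowercase bag-of-words counts.
    Real semantic caches use learned sentence embeddings."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Hypothetical cache: returns a stored answer when a new prompt is
    similar enough to a cached prompt, instead of calling the model again."""

    def __init__(self, threshold: float = 0.85) -> None:
        self.threshold = threshold
        self.entries: List[Tuple[Counter, str]] = []

    def put(self, prompt: str, answer: str) -> None:
        self.entries.append((embed(prompt), answer))

    def get(self, prompt: str) -> Optional[str]:
        query = embed(prompt)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:
            score = cosine(query, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        # Cache hit only if the closest entry clears the threshold.
        return best_answer if best_score >= self.threshold else None


cache = SemanticCache(threshold=0.85)
cache.put("Is the movie good?", "Yes, critics loved it.")

print(round(cosine(embed("Is the movie good?"), embed("Is the movie not good?")), 3))  # 0.894
print(cache.get("Is the movie not good?"))  # Yes, critics loved it.  (wrong answer)
print(cache.get("What time is it?"))        # None
```

Tuning the threshold trades false hits against missed paraphrases rather than removing the failure. Real embedding models are far less crude than bag-of-words, but the quote's point is that they too can score opposite statements as highly similar.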

Why limit yourself to nouns and bounding boxes? Throw in some relative clauses and go for the fill tool!